Introduction

I've been passionate about arts and technology from a young age, practicing visual art and playing various musical instruments in my youth, then developing a love of electronic music, digital art, and programming as a teen. In college I studied audio production and recording engineering, with a focus on music and sound design for interactive media, and developed websites on the side.

After freelancing in the audio industry for several years, I decided to broaden my programming abilities with a Computer Science degree, specializing in web / mobile development, computer graphics, and interactive media.

Currently I work as a software developer in Victoria BC, producing bass-heavy electronic music in my spare time.

Please take a look through some samples of my MUSIC, VIDEOS, ART, WEBSITES, APPS, and ESSAYS below.

Music Catalog

Experiments (2009)

A collection of tracks I produced while learning the tools of music production

Experiments Demos

A collection of pre-2009 demos that did not make it into the album

Recent Productions

An assortment of post-2009 unreleased tracks

Recent Demos

A collection of my more recent post-2009 demos

Audio Post-Production

Mirror's Edge

Sound Effects Replacement: I replaced all of the original sound effects with a combination of personal recordings and modified library samples.

Mirror's Edge Trailer

Music replacement project: I replaced the official music with an original composition.

Hunted: The Demon's Forge Trailer

Music replacement project: I replaced the official music with an original composition.

Revolver

Music replacement project: I replaced the official music with an original composition.

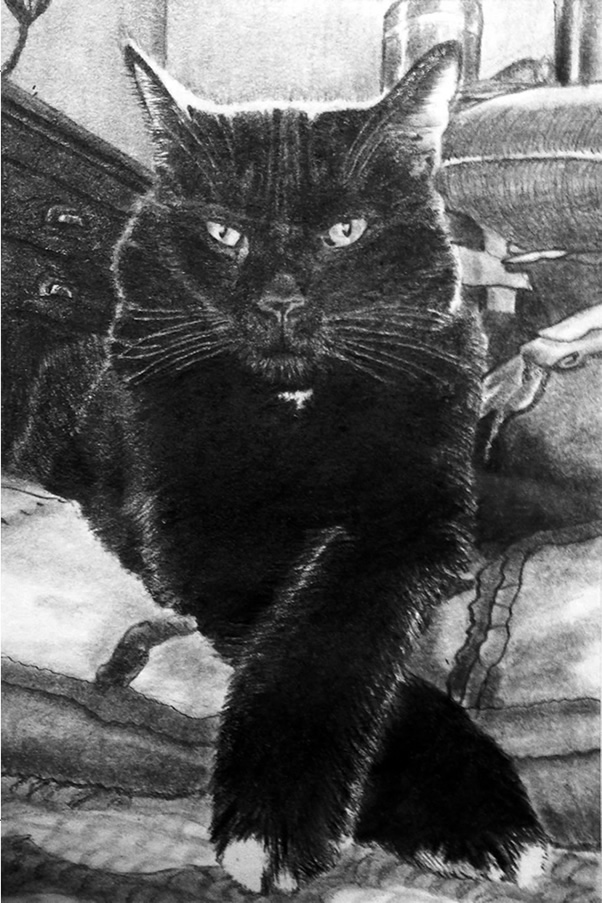

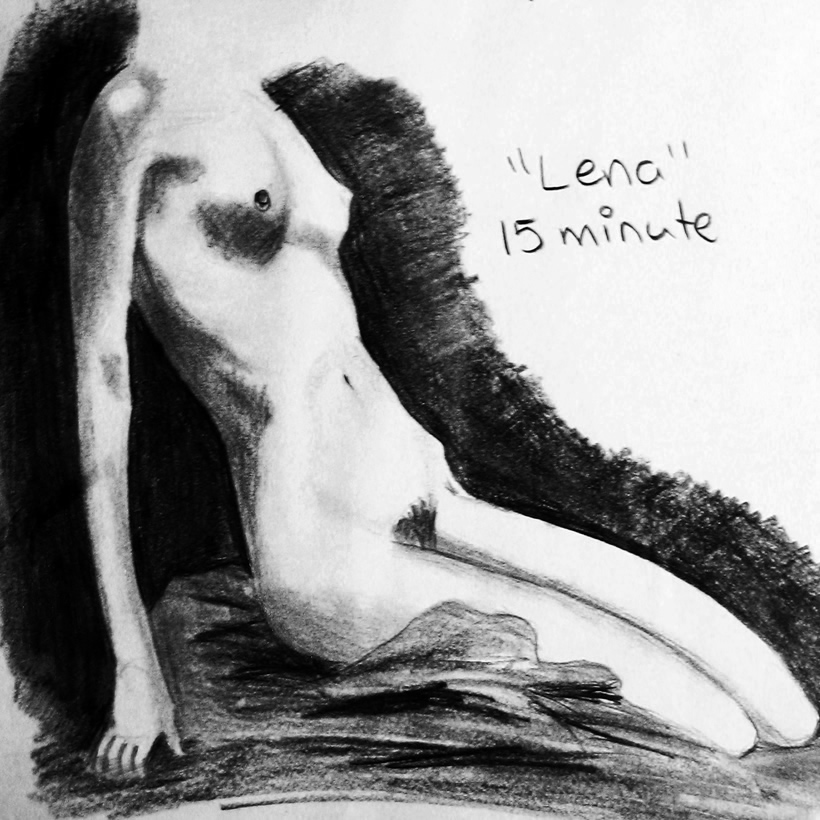

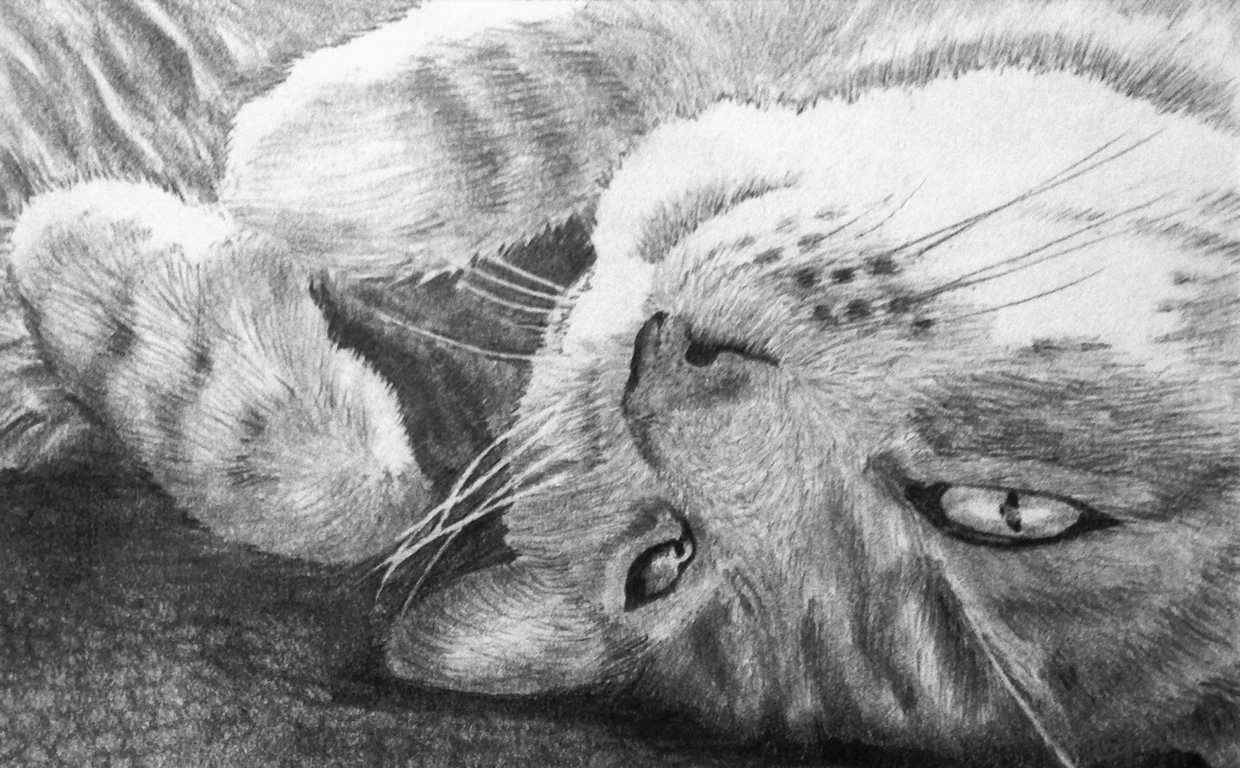

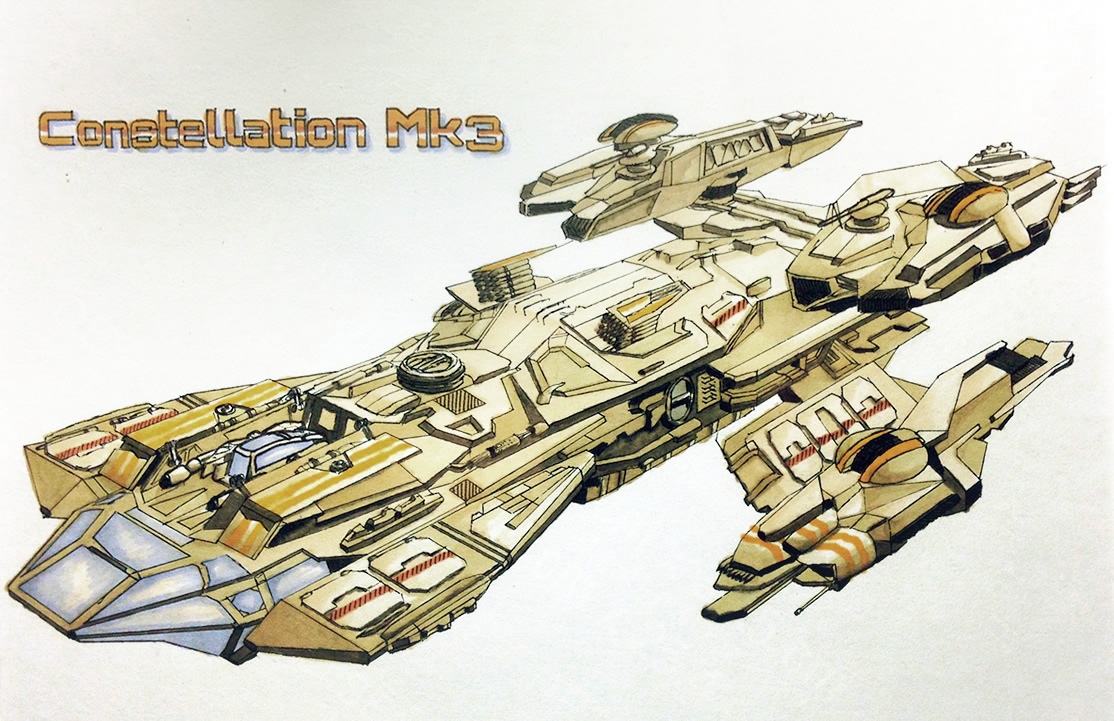

Visual Art

Web Development

Mobile Applications

Essays

Music, Ambience, and Sound Effects in Videogames

Videogames are pushing the boundaries of music composition and sound design. One must build an auditory experience that not only matches, but enhances, the interaction between the player and the game. The rhythm sets the level of excitement, and the blend of chosen sounds establishes a particular atmosphere or mood. In games, however, events are not predetermined as they are in movies and television. The player moves, and the game reacts. For this reason, music, ambience, and sound effects need to adapt to anything the player does and anywhere the player goes.

Rhythm is directly related to the excitement level in music, and this is of key importance to the videogame composer. High excitement music is associated with speed and danger: races, chases, escapes, fights and battles. Low excitement music is associated with calculated movement and safety: puzzles, stealth, roaming, exploration, memories and dreams. Thus, the rhythm and the excitement level of the music increase when the game's pace picks up, or when danger arises.

High excitement music generally has the following characteristics: pronounced drums and percussion, fast tempo (130-180 bpm), higher register melodies, dense staccato notes, hard/loud. Hard rock, punk, electronic, and orchestral music are commonly used to establish a high excitement level. On the other hand, low excitement music generally has these characteristics: minimal drums and percussion, slow tempo (90-110 bpm), lower register melodies, sparse legato notes, soft/quiet. Ambient, choral, electronic, and lounge music are commonly used to establish a low excitement level.

Certain genres of games call for particular types of music. Futuristic games almost always have an electronic soundtrack. In futuristic racers, such as Wipeout HD (Fig.1), we hear a lot of techno and house. Electronic music often has a heavy 4/4 pulse, which has a trance-inducing effect on the player, focusing his or her attention on the game. Any horror-type game goes well with slow ominous music, but atonal textures and ambience are also heavily relied on, in place of conventional music, to create a creepy mood. Extreme sports games, such as skateboarding, snowboarding, motocross, and BMX, often have popular rock, punk, or Top 40 remix playlists. Adventure/RPG music is usually orchestral; this effectively expresses a sense of exploration, or big open spaces.

Every era and region of the world has a unique mixture of sounds which make up its ambience. The elements (water, wind, fire, earth), the living (plants, animals), the man-made (tools, machines, structures), and the supernatural (spirits, energy) are all potential sources of sound in videogames. A dense soundscape would be effective in a jungle setting, brimming with energy, but in a post-apocalyptic world, there needs to be an unsettling silence to the environment. The composer's choice of instrumentation has a huge impact on the vibe of a game. Many games attempt a traditional movie-like experience, with a full orchestra and realistic ambience. Other games strive to go out of this world, and synthesize sounds that have never been heard before. Electronic music suits the latter very well, because electronic instruments are not bound by physical restrictions, and can take on forms that are impossible in the natural world.

An earthy, organic sound palette consisting of hand drums, rattles, and insect and animal noises is suited to tribal or prehistoric life. The medieval period is more advanced than the Stone Age, with developments in farming, architecture, and transportation, but it remains somewhat crude, as it predates the industrial revolution. String, wind, and brass instruments are developed, and ambiences may include horse-drawn carts, boats, torches, flags, and wilderness. The next era would be the industrial period, bringing forth the sounds of engines and city life. At this point, nature may not be nearby, and so the soundscape is mostly man-made.

Eventually, electronic devices replace mechanical ones, and the soundscape changes once again. Amplified music is born, and it becomes difficult to escape the constant hum of innumerable machines and devices in the modern-day cityscape: electric lights, air conditioners, engines, fans, and computers. Primary building materials are concrete, steel, and glass, instead of iron, wood, and stone. In the future, there is a merger between biology and technology. Nature is lost, and in its place are metropolises and wastelands. The soundscape of the future is similar to that of the present day, only quieter. With the changing sounds of eras summarized, the discussion now turns to regions.

Every geographic location in the world has instruments and ambience that are associated with it. The timeline above describes a generic Euro-American civilization, as opposed to an exotic one. Foreign settings include the ocean, deserts, islands, and jungles, as well as alien and spiritual worlds, in which anything can exist. Traditional folk music can be split into many streams: Celtic, Oriental, Indian, Middle Eastern, South and West African, Latin, Australian, Native American, Cajun, Inuit, Polynesian, Caribbean, and Russian. With a little research, suitable instruments can be identified for any of these nations. Ambience should fill in the gaps between music and primary sound effects; it can hint at weather conditions, the balance of nature and technology, or the presence/absence of people. Magic and science fiction are common themes in video games; however, they must have their own sound to designate them as special. As previously mentioned, electronic music and synthesized sounds are great for unnatural situations, because by definition, they are not natural. A portal to another realm radiates magical energy, and the sound designer must use his imagination to discern what magical energy should sound like.

In-game music needs to be composed in multiple layers: at the base, legato tones and percussive subtleties. As the action builds, a thematic melody, bass, and more rhythm elements come in. Finally, at the climax of the action, the most energetic layer of drums and harmonies enters. Based on the gameplay, additional layers come in or out. When nothing is happening, only ambience and sound effects are heard. During a final battle or chase mission, however, the music should energize the player. Aaron Marks describes this in his book on game audio:
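The layering idea can be sketched as a simple lookup from gameplay intensity to audible layers. This is only an illustration: the layer names, the 0.0 to 1.0 intensity scale, and the thresholds are assumptions made for the example, not values from Marks or from any particular game engine.

```python
# Hypothetical layer names and thresholds, chosen only for illustration.
# Each layer becomes audible once gameplay intensity reaches its threshold.
LAYERS = [
    ("base_legato_tones_and_light_percussion", 0.2),
    ("theme_melody_bass_and_rhythm", 0.5),
    ("climax_drums_and_harmonies", 0.8),
]


def active_layers(intensity):
    """Return the names of the music layers audible at a given intensity.

    intensity is an assumed scale from 0.0 (nothing happening) to 1.0 (climax).
    """
    return [name for name, threshold in LAYERS if intensity >= threshold]


idle = active_layers(0.1)    # empty: only ambience and sound effects are heard
chase = active_layers(0.6)   # base layer plus the thematic layer
climax = active_layers(1.0)  # all three layers
```

In a real game the layers would more likely be crossfaded than switched on and off, so that transitions stay musical.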

By design, interactive game music will adapt to the mood of the player and the game setting. Music will be slow and surreal as a player is exploring a new environment. If the game character moves from a walk to a run, the music will also keep pace by increasing in tempo. As danger approaches, the music will shift to increased tension, then to an all-out feeling of dread as the bad guy appears out of the shadows. The player chooses a weapon and begins the attack as the music shifts again to a battle theme to fire the player up. As the player gets hacked to pieces by the bad guy, the music will turn dark- conveying that the end is near. But, as the player gets his second wind and begins a determined counter attack, the music will morph into a triumphant flurry as he imposes death and destruction upon the evil villain. (234)

Two innovative examples of interactive audio are Rez (Fig.2) and Child of Eden (Fig.3), produced by Tetsuya Mizuguchi. The concept behind these games is synesthesia, or cross-sensory experiences. This is achieved in multiple ways: player movements are quantized to the rhythm of the background music; additional musical phrases are triggered when the player locks on to and destroys targets; and, the controller and scenery pulse to the music. This integrates the player's sense of sight, sound and touch, into one seamless experience. Rez was released in 2001, and is less refined than Child of Eden, released ten years later, in 2011. The former makes heavier use of loops, but compared to the latter they aren't arranged in a very musical way. The music in Child of Eden is more elaborately arranged, without compromising any of the cross-sensory effect. A major consideration regarding interactive music is blending game cues into the music, such as when the player wins or loses. Instead of the game music ending before the cue begins, it could resolve in the appropriate way: triumphant or gloomy. The more natural this transition, the better.

Interactive music aside, there are instances in video games when music is composed in a linear manner. Intro sequences, menu screens, cinematics, and credits are common examples.

Sound effects come from a combination of three places: foley recording, sound libraries, and synthesis. Foley is the art of using available materials to record new sounds or textures. Sound libraries are useful when you want to imitate sound from the past, or when you lack the time or resources required for foley recording. Synthesis is the art of producing sound from scratch. Each method has pros and cons, so it is recommended to learn which one to use for different situations. Sound effects are used in videogames much like they are in film: to increase the impact and believability of on-screen events. They also have the potential to affect the emotions of a listener at least as much as music can. Playing on the emotions is thus the secondary purpose of sound effects, after providing auditory feedback for on-screen events. Just like in music, harsh sounds increase tension, and soft sounds promote relaxation. These emotional cues work together with the music and ambience to foreshadow coming events and to underscore current situations.

Foley is ideal in most cases. It is much faster than synthesizing complex sounds and it means that the recordings are always unique. All that is required for foley is a portable recording rig, a microphone, and some creativity. Suitable sounds can come from the most unlikely objects, and making these types of connections is the job of a foley artist. Take a crackling fire for example: you don't need to stick a microphone into a real fire when a similar-enough sound can be made by crumpling paper. This has been done a million times, because when it's heard in the context of a campfire scene, it sounds like a campfire!

However, there are multitudes of sound libraries available online, and they can be a very convenient source of raw sound materials. But according to Marks, "the one major problem with licensed sound libraries is that anyone can have them and some sounds have a tendency to be overused... The way around this is to creatively manipulate or use small pieces of the original sound to make something new and fresh... For example, a bullet ricochet layered with an explosion gives you an entirely fresh explosion" (277-8). Manipulating and layering elements is the key to making original sounds from unoriginal libraries.

Synthesis is an incredibly vast art form of its own. There are a wide variety of techniques: additive, subtractive, granular, amplitude modulation (AM), ring modulation, frequency modulation (FM), wavetable, and physical modeling synthesis. Modern synthesizers usually incorporate at least two or three of these techniques. Most common are additive and subtractive, followed by AM and FM synthesis. Theoretically speaking, any imaginable sound can be produced by a synthesizer. But in practice, there are limits to what a synth can be used for. Typical applications for a synthesizer include futuristic weapons and vehicles, space travel, robots and mechanical suits, energy fields, teleportation and time travel, spell casting, and psychic phenomena. The majority of organic sounds require a lot more effort to produce convincingly than inorganic sounds, so foley and sound libraries are usually called for in these situations.

Technical specifications and limitations are the first consideration when a sound designer is given a project. The bit-rate and bit-depth of the end product can limit higher frequency details and dynamic range. The speaker arrangement can vary from a full-range 5.1 surround sound setup to a single mono 8mm micro speaker in a cell phone. Being aware of these specifications will greatly improve the sound designer's ability to produce suitable sounds for a project.

Genre is another important factor that influences what sounds are right for a project. Child-oriented games can call for simplistic, cheerful, or funny sounds, whereas adult-oriented games generally require more seriousness and sophistication. As discussed earlier, sound effects can increase tension or promote relaxation, and this is used to our advantage to give the player the excitement or thrill they desire. In a children's game, this is a gentle thrill, but for the veteran gamers out there, we want to set them up and knock them down, so to speak.

Beyond age demographics, some games aim to emulate real-world situations, like city or helicopter simulators (Tropico Fig.4, Apache Air Assault Fig.5). These games call for lots of field recording and sampling, to help convince the player they are experiencing the real thing. Other games are entirely fantasy based (Where the Wild Things Are Fig.6), for which synthesis and creative foley may be more appropriate.

Getting a proper mix of sound elements is as important in videogames as with any media. In the worst case scenario, the player is bored by the lack of dynamics, or distracted from the game by improperly mixed music and sound effects. In the best case scenario, the player is continuously absorbed in the gameplay because the music and sound effects support the ever-evolving circumstances of the player. Also, priority in the mix must be given to particular types of sounds so that the player doesn't miss any vital information. If there are voice-overs in the game to brief the player on their mission, for example, there must be no competing sounds in that frequency range that could prevent them from understanding their goal. Another scenario is when the player unlocks a hidden passageway; there should be an audible signal that something in the environment is changing, such as a rumbling stone door, or a magic portal releasing energy as it opens. Once again, there must be space in the mix for such a cue, or else it may not be heard. To ensure this, busy music should not play when the player needs to listen, and ambient noises should be low enough in the mix not to distract the player from important sounds.

In conclusion, the gaming experience is at its best when audio supports its dynamic qualities. In music, rhythm sets the level of excitement, instrumentation determines the overall atmosphere, and interactivity enables the mood to change from one situation to the next. Sound effects need to support the style and environment of the game, and provide feedback to the player when something important is happening.

Works Cited

Marks, Aaron. The Complete Guide to Game Audio. 2nd ed. Burlington, MA: Focal, 2009. Print.

Mizuguchi, Tetsuya. Rez. Tokyo: Sega, 2001. Dreamcast, PlayStation 2, Xbox 360 game.

Mizuguchi, Tetsuya. Child of Eden. Paris: Ubisoft, 2011. Xbox 360, PlayStation 3 game.

Granular Synthesis Techniques

In the future of video game audio, there will be advancements in the implementation of sound effects, ambience, dialog, and music. I predict a decline in the use of samples for these purposes, specifically sound effects and ambience, and increased use of real-time grain-based audio synthesis. This paper will outline a variety of techniques and principles relating to this emerging field and its associated technology.

Additive synthesis operates on the notion that all pitched sounds are made up of interrelated sine waves, also referred to as harmonics, overtones, or partials. The precursors to this type of synthesis are the pipe organ from the mid-fifteenth century, the Telharmonium, developed in 1897, and the Hammond organ, invented in 1934. These devices utilized physical stops, tonewheels, and drawbars to combine harmonics.
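As a minimal sketch of the principle, the snippet below sums sine-wave partials at integer multiples of a fundamental. The sample rate, the function name `additive_tone`, and the example amplitudes are assumptions chosen for illustration; they loosely mimic setting a few drawbars to different levels.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed for this example)


def additive_tone(fundamental_hz, harmonic_amps, n_samples):
    """Sum sine partials at integer multiples of the fundamental.

    harmonic_amps[k] is the amplitude of harmonic number k + 1,
    so harmonic_amps[0] scales the fundamental itself.
    """
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE
        value = sum(
            amp * math.sin(2 * math.pi * fundamental_hz * (k + 1) * t)
            for k, amp in enumerate(harmonic_amps)
        )
        samples.append(value)
    return samples


# Four harmonics of 220 Hz, each half as loud as the one before it.
tone = additive_tone(220.0, [1.0, 0.5, 0.25, 0.125], 441)
```

Changing the amplitude list changes the timbre while the perceived pitch stays at the fundamental.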

In the 1970s, Hal Alles of Bell Labs designed the first digital additive synthesizer, now known as the Alles Machine. Using two 61-key piano keyboards, four 3-axis analog joysticks, a bank of 72 sliders, and various switches, the operator could dynamically mix up to 32 voices and then program a harmonic pattern, filter, and amplifier for each voice. Up to 256 parameters could be controlled on individual envelope generators before the signals were mixed down to four 16-bit output channels (Alles 5-9). The Alles Machine had the capability to produce a diverse range of rich, harmonically complex sounds that were previously impossible to create.

Closely related to additive synthesis are two mathematical operations: Fourier analysis, which decomposes a mathematical function (e.g. a sound wave) into its individual frequencies; and Fourier synthesis, which sums a series of frequencies back into a mathematical function. Although the Fourier transform was developed over two hundred years ago, it was not until the development of stored-program computers in the 1940s that it could be performed automatically. Until the mid-1960s, the digital Fourier transform required an immense number of calculations, and computers were not able to perform it very efficiently. A set of algorithms known as the fast Fourier transform was then developed by James Cooley at Princeton University and John Tukey at Bell Labs (Roads 244). With this development, the Fourier transform made possible a powerful new technique called granular synthesis.
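The two operations can be illustrated with a direct (slow) discrete Fourier transform. Note that this is the naive form that requires on the order of N² calculations, not the fast Fourier transform itself; the function names and the 32-sample test signal are assumptions made for the example.

```python
import cmath
import math


def dft(signal):
    """Fourier analysis: decompose a real signal into complex frequency bins."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def inverse_dft(spectrum):
    """Fourier synthesis: sum the frequency bins back into a real signal."""
    n = len(spectrum)
    return [
        (sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
        for t in range(n)
    ]


# Test signal: a full-strength sine at bin 3 plus a half-strength sine at bin 5.
N = 32
signal = [
    math.sin(2 * math.pi * 3 * t / N) + 0.5 * math.sin(2 * math.pi * 5 * t / N)
    for t in range(N)
]
spectrum = dft(signal)
magnitudes = [abs(c) for c in spectrum[: N // 2]]  # energy per bin below Nyquist
rebuilt = inverse_dft(spectrum)                    # should match the original signal
```

The analysis finds energy only in bins 3 and 5, and the synthesis step reconstructs the original samples from those bins.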

A grain of sound is a microacoustic event, with duration near the threshold of human auditory perception, typically between 1 and 100 milliseconds (Roads 86). British physicist Dennis Gabor first proposed the breaking up of the audio spectrum into grains in 1947 (Manning 391). This idea was later explored by manipulating and compositing bits of sound on magnetic tape, a technique that became known as musique concrète, but it wasn't until the mid-1970s that the true potential of granular synthesis could be explored with the use of computers. This is because it typically takes a combination of thousands of grains to make up a larger acoustic event known as a cloud, and computer algorithms are required to have any reasonable amount of control over so many bits of information.

Individual grains are generated by wavetable oscillators, controlled by an amplitude envelope, and routed to a spatial location. Thus, the basic variables in granular synthesis are (1) the waveform initially used, (2) the grain's envelope and duration, and (3) the spatial positioning of grains. Subtle changes to these variables tend to have major effects on the resulting sound as a whole, as described here by Curtis Roads: "As a general principle of synthesis, we can trade off instrument complexity for score complexity. A simple instrument suffices, because the complexity of the sound derives from the changing combinations of many grains. Hence we must furnish a massive stream of control data for each parameter of the instrument." Examples of this include making changes to grain envelope, duration, waveform, frequency band, density / fill factor, and spatial location, each of which is explained in greater detail below.
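A single grain built from those three variables might look like the sketch below. It is a simplified illustration rather than Roads's implementation: a computed sine stands in for the wavetable oscillator, a raised-cosine (Hann) curve stands in for the bell-shaped envelope, and equal-power stereo panning stands in for spatial routing. The function name and sample rate are assumptions.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed)


def make_grain(freq_hz, duration_ms, pan):
    """Return (left, right) sample lists for one enveloped, panned sine grain.

    pan runs from 0.0 (hard left) to 1.0 (hard right).
    """
    n = max(2, int(SAMPLE_RATE * duration_ms / 1000))
    left, right = [], []
    for i in range(n):
        # (1) waveform: a sine wave at the grain's frequency
        wave = math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        # (2) envelope: raised-cosine bell that starts and ends at zero
        env = 0.5 * (1 - math.cos(2 * math.pi * i / (n - 1)))
        sample = wave * env
        # (3) spatial position: equal-power stereo panning
        left.append(sample * math.cos(pan * math.pi / 2))
        right.append(sample * math.sin(pan * math.pi / 2))
    return left, right


centre_left, centre_right = make_grain(440.0, 20, 0.5)
```

A cloud would be produced by mixing thousands of such grains at different onset times, which is where the "massive stream of control data" comes in.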

Several envelope shapes are available for grains. The bell-shaped Gaussian curve is the smoothest and simplest envelope. Quasi-Gaussian curves have identical attack and decay sections but a longer sustain time, which narrows the harmonic spectrum of the grain. Angular envelopes, made of vector paths with no curve, produce a similar frequency response to Gaussian curves, with a spectral trough for each corner in the envelope. Expodec envelopes are shaped like an impulse response, decaying at an exponential rate. Rexpodec envelopes are the opposite, increasing at an exponential rate, and are perceived as though the grain is being played in reverse. Interestingly, symmetrical grains, as well as clouds made up of them, can be played backwards with no perceived change in tonal qualities.
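Three of these envelope shapes can be sketched as follows. The `sigma` and `decay` parameters and their default values are assumptions chosen to give reasonable-looking curves; they are not taken from Roads.

```python
import math


def gaussian(n, sigma=0.15):
    """Bell curve centred on the grain; sigma is a fraction of the grain length."""
    return [math.exp(-0.5 * ((i / (n - 1) - 0.5) / sigma) ** 2) for i in range(n)]


def expodec(n, decay=5.0):
    """Instant attack followed by an exponential decay."""
    return [math.exp(-decay * i / (n - 1)) for i in range(n)]


def rexpodec(n, decay=5.0):
    """Time-reversed expodec: an exponential rise ending at full level."""
    return expodec(n, decay)[::-1]


bell = gaussian(101)
fall = expodec(101)
rise = rexpodec(101)
```

Because the Gaussian curve is symmetrical, reversing it gives the same envelope, which is why such grains sound the same played backwards.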

There are four methods of calculating grain duration: constant, in which every grain is equal in duration; time-varying, in which grain duration changes over time; random, in which grain duration is randomized within a defined range; and parameter-dependent, in which grain duration depends on another parameter, such as its fundamental frequency period.
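The four methods can each be written as a small function. This is a sketch under assumptions: the function names are invented for the example, the time-varying case uses simple linear interpolation, and the parameter-dependent case ties duration to a fixed number of waveform periods.

```python
import random


def constant_duration(ms):
    """Every grain has the same duration."""
    return ms


def time_varying_duration(start_ms, end_ms, progress):
    """Duration glides linearly from start to end; progress runs 0.0 to 1.0."""
    return start_ms + (end_ms - start_ms) * progress


def random_duration(min_ms, max_ms, rng):
    """Duration drawn uniformly from a defined range."""
    return rng.uniform(min_ms, max_ms)


def parameter_dependent_duration(freq_hz, periods=4):
    """Duration in ms equal to a fixed number of periods of the grain's frequency."""
    return 1000.0 * periods / freq_hz


rng = random.Random(1)  # seeded so the example is repeatable
halfway = time_varying_duration(10, 50, 0.5)      # 30.0 ms midway through the cloud
scattered = random_duration(5, 20, rng)           # somewhere between 5 and 20 ms
four_cycles = parameter_dependent_duration(100)   # 40.0 ms at 100 Hz
```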

If a cloud is composed of grains that all share a common waveform, it is described as monochrome. If the grains alternate between multiple waveforms, the cloud is polychrome. If the grains contain a waveform that evolves over a span of time, the cloud is transchrome.

The fundamental frequencies of grain waveforms can be controlled in two ways. In cumulus clouds, the frequencies are quantized to specified notes or a scale; whereas in stratus clouds, they are scattered evenly within frequency band parameters. Partial quantization in clouds results in a subtle transition between the harmonic cumulus sound and non-harmonic stratus sound. Glissando can be achieved by quantizing grains to frequencies that change over time. Also, the frequency spectrum can be broadened, narrowed, raised, lowered, or any combination thereof, by modulating the cloud's upper and lower frequency boundaries.
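The difference between the two frequency-control modes can be sketched as below. The function names, the linear-in-Hz scattering, and the A minor pentatonic note list are assumptions made for illustration.

```python
import random


def stratus_frequency(low_hz, high_hz, rng):
    """Scatter a grain's frequency evenly within the band (linear in Hz)."""
    return rng.uniform(low_hz, high_hz)


def cumulus_frequency(low_hz, high_hz, scale_hz, rng):
    """Pick a frequency within the band, then quantize it to the nearest scale note."""
    target = rng.uniform(low_hz, high_hz)
    return min(scale_hz, key=lambda note: abs(note - target))


# A minor pentatonic from A3 to A4 in equal temperament (assumed example scale).
SCALE_HZ = [220.00, 261.63, 293.66, 329.63, 392.00, 440.00]

rng = random.Random(7)  # seeded so the example is repeatable
stratus = [stratus_frequency(220.0, 440.0, rng) for _ in range(20)]
cumulus = [cumulus_frequency(220.0, 440.0, SCALE_HZ, rng) for _ in range(20)]
```

Partial quantization could be approximated by choosing between the two functions at random for each grain, and a glissando by moving the band boundaries or scale notes over time.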

The density of a cloud refers to the number of grains per second. The combination of density and grain duration makes up the texture of the cloud, which can be described by its fill factor (FF). A fill factor value is calculated by multiplying the number of grains per second by the grain duration in seconds: FF = density × duration. The result is interpreted as follows: if FF is less than 0.5, the cloud is less than half populated; if FF is 1, the cloud is on average fully populated; if FF is greater than 2, the cloud is densely populated by more than two layers of overlapping grains. If the grains are being generated at an irregular rate, a fill factor of 2 is recommended for the perception of a solid cloud, without any intermittency. High densities can create different effects depending on the frequency bandwidth. Within narrow bands (one semitone or less), high densities produce pitched streams with formant spectra; in moderate-width bands (several semitones), high densities produce swelling, turbulent sounds; and over wide bands (an octave or more), high densities generate massive walls of intricately evolving sound.
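As a worked example of the fill factor formula, the snippet below computes FF for a few clouds and also inverts the formula to find the density needed for a target fill factor. The function names are assumptions, and durations are passed in milliseconds and converted to seconds inside the functions.

```python
def fill_factor(grains_per_second, grain_duration_ms):
    """FF = density x duration, with the duration converted from ms to seconds."""
    return grains_per_second * grain_duration_ms / 1000.0


def density_for_fill_factor(target_ff, grain_duration_ms):
    """Grains per second required to reach a target fill factor."""
    return target_ff * 1000.0 / grain_duration_ms


sparse = fill_factor(25, 10)               # 0.25: less than half populated
solid = fill_factor(100, 20)               # 2.0: the value recommended for irregular clouds
needed = density_for_fill_factor(2.0, 40)  # 50 grains per second of 40 ms grains
```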

The more output channels that a cloud is spread over, the more possibilities there are for shaping it in multiple dimensions. Stereo clouds are much richer than monaural clouds, and surround channels expand on the stereo image to create a three-dimensional sense of envelopment. In experimental sound installations, many more than 5.1 channels are utilized; a truly immersive and interactive environment could be constructed with an assortment of speakers positioned throughout a room.

According to Roads (91), there are six primary methods of organizing grains, which can be modified or used in combination: (1) Spectrogram matrix analysis, (2) Pitch-synchronous overlapping streams, (3) Synchronous and quasi-synchronous streams, (4) Asynchronous clouds, (5) Physical or abstract models, and (6) Granulation of sampled sound:

The first method is based on the Gabor matrix. It converts amplitude-over-time data (a waveform) into spectral-content-over-time data (a spectrogram), applies a two-dimensional matrix (time and frequency) to quantize the data into cells, then measures the energy content of each cell. The second method is designed for generating pitched sounds, and begins with a spectrogram matrix analysis. Each cell along the y-axis corresponds to a specific fundamental frequency, and the grain is triggered at a rate based on the corresponding period length, as determined by a pitch detection algorithm. This enables a very smooth transition from one grain to another on every frequency. In the third method, grains are triggered at a regular interval, unconnected to the period length of the frequency. Grains triggered at intervals of 50 to 1000 milliseconds (1-20 Hz) are perceived rhythmically, whereas higher rates are perceived as continuous tones. The combination of the waveform frequency, the grain frequency, and the grain envelope creates harmonic sidebands which "may sound like separate pitches or they may blend onto a formant peak. At certain settings, these tones exhibit a marked vocal-like quality" (Roads 93).

The grain frequency can also incorporate an amount of randomization. No randomization is used in synchronous synthesis, some is used in quasi-synchronous synthesis, and complete randomization is used in the fourth method, which scatters grains to form asynchronous clouds. The grains are distributed evenly within a set of boundaries defined by the composer. The fifth method is based on algorithms that model the physical behavior of acoustic grains in the real world, as produced by an instrument such as a shaker, maraca, tambourine, or guiro; or natural sounds such as rain, waves, leaves, or sand. The sixth method uses granulated audio samples as the source material for grain waveforms, instead of the usual oscillators. This concept is closely related to concatenative synthesis.
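The difference between synchronous, quasi-synchronous, and asynchronous triggering comes down to how grain onset times are scheduled, which can be sketched as below. The function names and the `jitter` parameter (a fraction of one trigger period) are assumptions made for this example.

```python
import random


def synchronous_onsets(rate_hz, duration_s):
    """Grain start times at a perfectly regular interval."""
    count = int(rate_hz * duration_s)
    return [k / rate_hz for k in range(count)]


def quasi_synchronous_onsets(rate_hz, duration_s, jitter, rng):
    """Regular onsets, each shifted by up to +/- half of jitter x one period."""
    period = 1.0 / rate_hz
    return [
        max(0.0, t + rng.uniform(-0.5, 0.5) * jitter * period)
        for t in synchronous_onsets(rate_hz, duration_s)
    ]


def asynchronous_onsets(rate_hz, duration_s, rng):
    """The same number of grains, scattered evenly over the whole duration."""
    count = int(rate_hz * duration_s)
    return sorted(rng.uniform(0.0, duration_s) for _ in range(count))


rng = random.Random(3)  # seeded so the example is repeatable
regular = synchronous_onsets(10, 1.0)                 # 10 Hz: heard as a rhythm
loose = quasi_synchronous_onsets(10, 1.0, 0.5, rng)   # slightly irregular rhythm
cloud = asynchronous_onsets(10, 1.0, rng)             # no regular pulse at all
```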

In 1987, Barry Truax released the first granular analysis/resynthesis (also known as granulation) software, GSAMX, in which the spectral characteristics of an incoming signal are analyzed in real-time and applied to the grain generator for playback:

The most notable feature of granular synthesis is the ability to explore the inner spectral detail of sounds by stretching their evolution in time from perhaps a fraction of a second to many orders of seconds. The resulting transformation from transient to continuously evolving spectra results in rich new textures that can be projected spatially in a stereo or multichannel environment.

Most modern implementations of granular synthesis focus on analysis and resynthesis functions, as opposed to pure synthesis, which was used almost exclusively prior to Truax's development of GSAMX, although synthetic waveforms can still be seen in operation from time to time (Roads 117).

To conclude this overview of basic granular synthesis, altering grains on both an individual and collective basis is found to result in a highly complex texture of sound. By layering grains with a variety of waveshapes, fundamental frequencies, envelopes, durations, or spatial locations, the sound gains thick and irregular qualities that cannot be achieved with additive or subtractive synthesis techniques.

In addition to granular synthesis, there are a number of related synthesis techniques based on particles of sound; these variations expand on the concepts that have been discussed so far.

Glisson synthesis is a technique whereby grains themselves are in a constant state of change, with a start position and an end position in the frequency plane. Although glissando only applies to pitch in the literal sense, the practice can also be extended to movement between output channels. There are two parameters unique to this type of synthesis: displacement amount, ranging from subtle to extreme; and directionality, in which grains can travel in a common direction, move in random directions, converge together, or diverge apart. The combination of these two parameters opens up a host of possibilities absent in standard granular synthesis.
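A single glisson can be sketched as a grain whose frequency sweeps from a start value to an end value over its duration. This example covers only the pitch dimension, not movement between output channels or the directionality of a whole cloud. The function name, sample rate, linear sweep, and raised-cosine envelope are assumptions made for illustration.

```python
import math

SAMPLE_RATE = 44100  # samples per second (assumed)


def glisson(start_hz, end_hz, duration_ms):
    """Return one grain whose frequency glides linearly from start_hz to end_hz."""
    n = max(2, int(SAMPLE_RATE * duration_ms / 1000))
    samples = []
    phase = 0.0
    for i in range(n):
        position = i / (n - 1)  # 0.0 at the grain's start, 1.0 at its end
        freq = start_hz + (end_hz - start_hz) * position
        env = 0.5 * (1 - math.cos(2 * math.pi * position))  # bell-shaped envelope
        samples.append(env * math.sin(phase))
        # Accumulating phase keeps the waveform continuous as the frequency changes.
        phase += 2 * math.pi * freq / SAMPLE_RATE
    return samples


rising = glisson(200.0, 800.0, 50)   # extreme upward displacement
subtle = glisson(440.0, 450.0, 50)   # subtle displacement
```

Converging or diverging clouds would follow from choosing each grain's end frequency relative to a shared target.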

Grainlet synthesis is a technique that is based on linking various parameters to one another. Density, amplitude, start-time, frequency, duration, waveform, spatial positioning and reverberation can be interconnected, resulting in algorithmic organization of grains that can only be accomplished in this way.

Trainlet synthesis is performed using acoustic particles called trainlets, which are made up of impulses. Theoretically speaking, an impulse is an infinitesimally brief burst of energy with equal energy across the frequency spectrum; but in practice, an impulse has a minimum duration, or pulse width, of one sample, and its actual frequency response is limited to half of the sampling rate. When multiple impulses are activated within close proximity to each other, the spectral image develops a number of comb-shaped troughs, defining harmonics in between them, corresponding to the distance between the impulses.

In conclusion, granular synthesis has a huge potential in videogames, and other interactive media. It is extremely versatile, and can be used to emulate natural acoustic occurrences, or to realize fantastical soundscapes.

Works Cited

Alles, Hal. "A Portable Digital Sound Synthesis System", Computer Music Journal, Volume 1 Number 3 (Fall 1976), pg. 5-9

Helmholtz, Hermann von, and Alexander John Ellis. "The Sensations of Tone as a Physiological Basis for the Theory of Music" (second ed.). Longmans, Green (1885). p. 23.

Manning, Peter. 2004. Electronic and Computer Music. p.391-2

Schwarz, Diemo, "Concatenative Sound Synthesis: The Early Years". 2006.

Schwarz, Diemo, and Gregory Beller, Bruno Verbrugghe, Sam Britton. "Real-Time Corpus-Based Concatenative Synthesis with CataRT". 2006.

Truax, Barry. "Organizational Techniques for C:M Ratios in Frequency Modulation", Computer Music Journal, 1(4), 1978, pp. 39-45. MIT Press, 1985.